PhD defence, Mercedes García Martínez

Date : 27/03/2017

Hour : 14h00

Localisation : amphitheatre, building IC2, LIUM, Université du Mans

Title : Factored Neural Machine Translation

Composition of the jury :

Reviewers :

– Marcello FEDERICO, Professor, Fondazione Bruno Kessler, Trento

– Alexandre ALLAUZEN, Lecturer HDR, LIMSI-CNRS, Université Paris-Sud

Examiners :

– Lucia SPECIA, Professeur, University of Sheffield

– Francisco CASACUBERTA, Professor, Universitat Politècnica de València

Supervisor : Yannick ESTÈVE, Professor, LIUM, Le Mans Université

Co-supervisor : Loïc BARRAULT, Lecturer, LIUM, Le Mans Université

Co-advisor : Fethi BOUGARES, Lecturer, LIUM, Le Mans Université

Abstract :

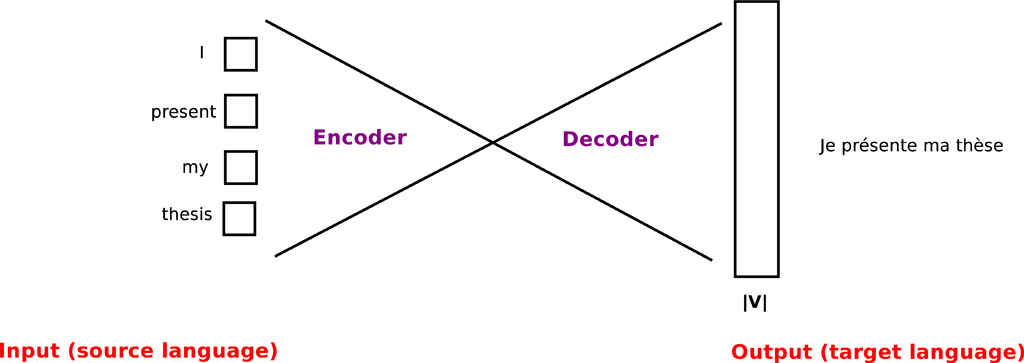

Communication between humans across the lands is difficult due to the diversity of languages. Machine translation is a quick and cheap way to make translation accessible to everyone. Recently, Neural Machine Translation (NMT) has achieved impressive results. This thesis is focus on the Factored Neural Machine Translation (FNMT) approach which is founded on the idea of using the morphological and grammatical decomposition of the words (lemmas and linguistic factors) in the target language. This architecture addresses two well-known challenges occurring in NMT.

Firstly, the limitation on the target vocabulary size which is a consequence of the computationally expensive softmax function at the output layer of the network, leading to a high rate of unknown words. Secondly, data sparsity which is arising when we face a specific domain or a morphologically rich language. With FNMT, all the inflections of the words are supported and larger vocabulary is modelled with similar computational cost. Moreover, new words not included in the training dataset can be generated. In this work, I developed different FNMT architectures using various dependencies between lemmas and factors. In addition, I enhanced the source language side also with factors. The FNMT model is evaluated on various languages including morphologically rich ones. State of the art models, some using Byte Pair Encoding (BPE) are compared to the FNMT model using small and big training datasets. We found out that factored models are more robust in low resource conditions. FNMT has been combined with BPE units performing better than pure FNMT model when trained with big data. We experimented with different domains obtaining improvements with the FNMT models. Furthermore, the morphology of the translations is measured using a special test suite showing the importance of explicitly modeling the target morphology. Our work shows the benefits of applying linguistic factors in NMT.

Key words :

Neural Machine Translation, Factored models, Deep Learning, Neural Networks, Machine Translation

Français

Français