Multilingual speech translation under resource constraints

Supervisor: Antoine Laurent

Host team: LIUM – LST

Localization: Le Mans

Contact: Antoine.Laurent(at)univ-lemans.fr

Context: This postdoc is in the field of natural language processing (NLP), more specifically automatic speech translation under resource constraints. It is part of the DGA Rapid project COMMUTE.

Objectives: The postdoctoral researcher will be responsible for conducting competitive research in the field of speech translation.

Self-Supervised Representation Learning (SSRL) from speech [Harwath et al., 2016, Hsu et al., 2017, Khurana et al., 2019, Pascual et al., 2019, Schneider et al., 2019, Baevski et al., 2020a, Chung and Glass, 2020, Khurana et al., 2020, Baevski et al., 2020b, Harwath et al., 2020, Conneau et al., 2020, Liu et al., 2021b, Hsu et al., 2021, Chung et al., 2021, Babu et al., 2021, Liu et al., 2021a, Khurana et al., 2022, Bapna et al., 2022] has improved tremendously over the past few years, largely following the introduction of Contrastive Predictive Coding (CPC) [Oord et al., 2018], a self-supervised representation learning method applied to speech, text, and visual data. Bringing the core idea of noise-contrastive estimation [Gutmann and Hyvärinen, 2010] into CPC led to a series of speech SSRL models such as Wav2Vec [Schneider et al., 2019], VQ-Wav2Vec [Baevski et al., 2020a], Wav2Vec-2.0 [Baevski et al., 2020b], Multilingual Wav2Vec-2.0 [Conneau et al., 2020], and XLS-R, a larger version of Multilingual Wav2Vec-2.0 [Babu et al., 2021]. Pre-trained SSRL speech encoders like XLS-R are considered “foundation models” [Bommasani et al., 2021] for downstream multilingual speech processing applications such as Multilingual Automatic Speech Recognition [Conneau et al., 2020, Rivière et al., 2020, Babu et al., 2021], Multilingual Speech Translation (MST) [Li et al., 2020, Babu et al., 2021, Bapna et al., 2022], and other paralinguistic property prediction tasks [Shor et al., 2021, Yang et al., 2021]. This work focuses on Multilingual Speech Translation.
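The contrastive objective that CPC derives from noise-contrastive estimation (usually called InfoNCE) can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: each context vector has exactly one positive target, the other targets in the batch serve as negatives, and scores use a bilinear form `W` as in CPC. Wav2Vec-2.0 uses a different variant (cosine similarity with a temperature, quantized targets, and distractors sampled from other masked time steps), so this is not the exact loss of any cited model. The function name `info_nce_loss` is hypothetical.

```python
import numpy as np


def info_nce_loss(context: np.ndarray, targets: np.ndarray, W: np.ndarray) -> float:
    """InfoNCE loss with in-batch negatives (illustrative sketch).

    context: (N, d_c) context vectors c_t.
    targets: (N, d_z) encoded future frames z_{t+k}; row i is the positive for
             context row i and every other row acts as a negative (assumption).
    W:       (d_z, d_c) bilinear scoring matrix W_k.
    """
    # Bilinear scores s_ij = z_j^T W c_i, shape (N, N)
    scores = context @ W.T @ targets.T
    # Numerically stable log-softmax over the N candidates of each context
    scores = scores - scores.max(axis=1, keepdims=True)
    log_probs = scores - np.log(np.exp(scores).sum(axis=1, keepdims=True))
    # Negative log-probability of the positive (diagonal) pairs, averaged
    return float(-np.mean(np.diag(log_probs)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    c = rng.standard_normal((8, 64))                      # hypothetical context vectors
    z = 5.0 * c / np.linalg.norm(c, axis=1, keepdims=True)  # positives aligned with contexts
    print(info_nce_loss(c, z, np.eye(64)))                # small: positives are easy to pick out
```

When all scores are equal, the loss is exactly log N (chance level); it approaches zero as each positive becomes clearly separable from its negatives.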

The standard neural network architecture for MST is the encoder-decoder model [Sutskever et al., 2014, Vaswani et al., 2017]. MST has recently improved significantly owing to: (i) better initialization of the translation model's encoder and decoder with pre-trained speech encoders, such as XLS-R [Babu et al., 2021], and text decoders, such as mBART [Liu et al., 2020]; (ii) better fine-tuning strategies [Li et al., 2020]; and (iii) parallel speech-text translation corpora [Iranzo-Sánchez et al., 2019, Wang et al., 2020]. However, performance on low-resource tasks remains poor; in particular, the performance gap between high- and low-resource languages (the cross-lingual transfer gap) remains large. We hypothesize that this is because the XLS-R speech encoder learns non-robust, surface-level features from unlabeled speech data rather than high-level linguistic knowledge about semantics.

To inject semantic knowledge into the learned XLS-R representations, we turn to the recently introduced Semantically-Aligned Multimodal Cross-Lingual Representation Learning framework, SAMU-XLS-R [Khurana et al., 2022]. SAMU-XLS-R is a knowledge-distillation framework that distills semantic knowledge from a pre-trained text embedding model into the pre-trained multilingual XLS-R speech encoder.

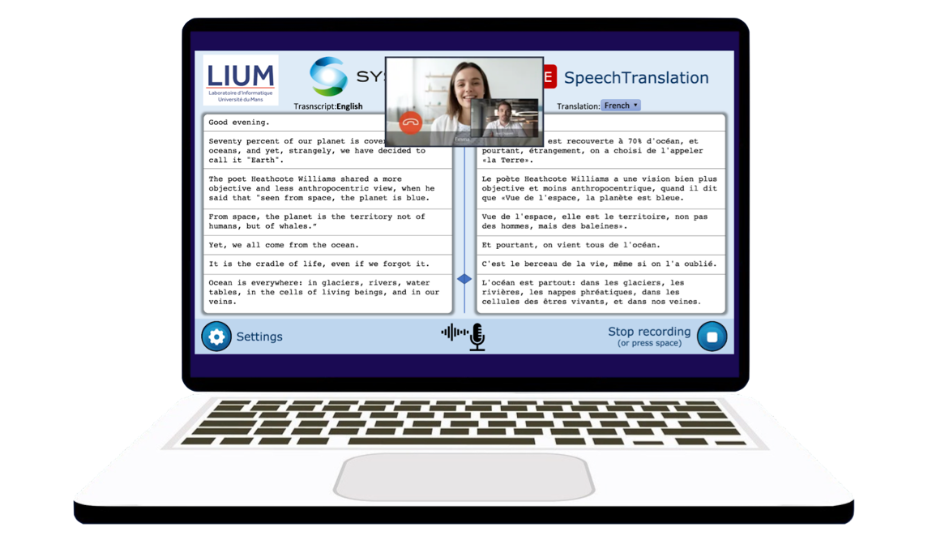
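The distillation idea behind SAMU-XLS-R can be illustrated with a short sketch: frame-level speech features from the student encoder are pooled into one utterance-level vector, projected into the embedding space of a frozen text embedding model (the teacher), and pulled toward the teacher's embedding of the corresponding text. The mean pooling, the linear projection, and the cosine-distance loss below are illustrative assumptions and may differ from the exact choices in [Khurana et al., 2022]; the function names and array dimensions are hypothetical.

```python
import numpy as np


def utterance_embedding(frames: np.ndarray, proj: np.ndarray) -> np.ndarray:
    """Pool frame-level speech features (T, d_s) into one utterance vector and
    project it to the text-embedding space, giving shape (d_t,).
    Mean pooling and a linear projection are assumptions for illustration."""
    pooled = frames.mean(axis=0)  # (d_s,)
    return proj @ pooled          # (d_t,)


def cosine_distillation_loss(speech_emb: np.ndarray, text_emb: np.ndarray,
                             eps: float = 1e-8) -> float:
    """Mean of (1 - cosine similarity) between student (speech) and teacher (text)
    embeddings. Works on single vectors (d,) or batches (B, d)."""
    num = np.sum(speech_emb * text_emb, axis=-1)
    den = np.linalg.norm(speech_emb, axis=-1) * np.linalg.norm(text_emb, axis=-1) + eps
    return float(np.mean(1.0 - num / den))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frames = rng.standard_normal((120, 1024))       # hypothetical encoder outputs (T=120, d_s=1024)
    proj = 0.01 * rng.standard_normal((768, 1024))  # hypothetical trainable projection
    text_emb = rng.standard_normal(768)             # hypothetical teacher sentence embedding
    print(cosine_distillation_loss(utterance_embedding(frames, proj), text_emb))
```

In training, only the speech side (encoder and projection) would be updated, so the teacher's sentence-level semantic space acts as the target; the loss is 0 for perfectly aligned embeddings and 2 for opposite ones.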

The goal of this work is to improve this framework and to develop a new model capable of processing audio in a streaming fashion. The results of the work will be integrated into a demonstrator.
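One common way to let an encoder consume audio incrementally is to cut the waveform into fixed-size chunks and prepend a bounded amount of already-seen audio as left context. The sketch below only shows this chunking step and is an illustrative assumption, not the streaming model the project will develop; `stream_chunks`, `chunk_size`, and `left_context` are hypothetical names, with sizes given in samples.

```python
from typing import Iterator, Tuple

import numpy as np


def stream_chunks(audio: np.ndarray, chunk_size: int,
                  left_context: int) -> Iterator[Tuple[np.ndarray, int]]:
    """Yield (window, n_context) pairs over a 1-D waveform.

    Each window holds up to `left_context` samples of previously seen audio
    followed by the next `chunk_size` new samples; the first `n_context`
    samples of the window are context only.
    """
    if chunk_size <= 0 or left_context < 0:
        raise ValueError("chunk_size must be positive and left_context non-negative")
    for start in range(0, len(audio), chunk_size):
        ctx_start = max(0, start - left_context)
        window = audio[ctx_start:start + chunk_size]
        yield window, start - ctx_start


if __name__ == "__main__":
    # e.g. 1 s chunks with 0.5 s of left context at an assumed 16 kHz sampling rate
    audio = np.zeros(16000 * 3)
    for window, n_context in stream_chunks(audio, chunk_size=16000, left_context=8000):
        print(len(window), n_context)
```

An incremental encoder would then run on each `window` and keep only the outputs that correspond to samples after the first `n_context`; larger chunks and more context generally trade higher latency for more accurate representations.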

Organization: The work will be carried out at LIUM. The postdoc will have access to the laboratory servers to carry out their research. The gross salary will be around €42,000 per year, for a period of one year, renewable once.

Application:

Send a CV and cover letter to Antoine Laurent (Antoine.Laurent(at)univ-lemans.fr) before October 31, 6 pm.

References:

- [Babu et al., 2021] Babu, A., Wang, C., Tjandra, A., Lakhotia, K., Xu, Q., Goyal, N., Singh, K., von Platen, P., Saraf, Y., Pino, J., Baevski, A., Conneau, A., and Auli, M. (2021). XLS-R: Self-supervised cross-lingual speech representation learning at scale. arXiv:2111.09296.

- [Baevski et al., 2020a] Baevski, A., Schneider, S., and Auli, M. (2020a). vq-wav2vec: Self-supervised learning of discrete speech representations. In International Conference on Learning Representations.

- [Baevski et al., 2020b] Baevski, A., Zhou, H., Mohamed, A., and Auli, M. (2020b). wav2vec 2.0: A framework for self-supervised learning of speech representations. arXiv:2006.11477.

- [Bapna et al., 2022] Bapna, A., Cherry, C., Zhang, Y., Jia, Y., Johnson, M., Cheng, Y., Khanuja, S., Riesa, J., and Conneau, A. (2022). mSLAM: Massively multilingual joint pre-training for speech and text. arXiv:2202.01374.

- [Bommasani et al., 2021] Bommasani, R. et al. (2021). On the opportunities and risks of foundation models. arXiv:2108.07258.

- [Chung and Glass, 2020] Chung, Y.-A. and Glass, J. (2020). Generative pre-training for speech with autoregressive predictive coding. In ICASSP 2020 – 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 3497–3501. IEEE.

- [Chung et al., 2021] Chung, Y.-A., Zhang, Y., Han, W., Chiu, C.-C., Qin, J., Pang, R., and Wu, Y. (2021). w2v-BERT: Combining contrastive learning and masked language modeling for self-supervised speech pre-training. arXiv:2108.06209.

- [Conneau et al., 2020] Conneau, A., Baevski, A., Collobert, R., Mohamed, A., and Auli, M. (2020). Unsupervised cross-lingual representation learning for speech recognition. arXiv:2006.13979.

- [Gutmann and Hyvärinen, 2010] Gutmann, M. and Hyvärinen, A. (2010). Noise-contrastive estimation: A new estimation principle for unnormalized statistical models. In Teh, Y. W. and Titterington, M., editors, Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, volume 9 of Proceedings of Machine Learning Research, pages 297–304, Chia Laguna Resort, Sardinia, Italy. PMLR.

- [Harwath et al., 2020] Harwath, D., Hsu, W.-N., and Glass, J. (2020). Learning hierarchical discrete linguistic units from visually-grounded speech. In International Conference on Learning Representations.

- [Harwath et al., 2016] Harwath, D., Torralba, A., and Glass, J. (2016). Unsupervised learning of spoken language with visual context. Advances in Neural Information Processing Systems, 29.

- [Hsu et al., 2021] Hsu, W.-N., Bolte, B., Tsai, Y.-H. H., Lakhotia, K., Salakhutdinov, R., and Mohamed, A. (2021). HuBERT: Self-supervised speech representation learning by masked prediction of hidden units. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 29:3451–3460.

- [Hsu et al., 2017] Hsu, W.-N., Zhang, Y., and Glass, J. (2017). Unsupervised learning of disentangled and interpretable representations from sequential data. Advances in Neural Information Processing Systems, 30.

- [Iranzo-Sánchez et al., 2019] Iranzo-Sánchez, J., Silvestre-Cerdà, J. A., Jorge, J., Roselló, N., Giménez, A., Sanchis, A., Civera, J., and Juan, A. (2019). Europarl-ST: A multilingual corpus for speech translation of parliamentary debates. arXiv:1911.03167.

- [Khurana et al., 2019] Khurana, S., Joty, S. R., Ali, A., and Glass, J. (2019). A factorial deep Markov model for unsupervised disentangled representation learning from speech. In ICASSP 2019 – 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 6540–6544.

- [Khurana et al., 2022] Khurana, S., Laurent, A., and Glass, J. (2022). SAMU-XLSR: Semantically-aligned multimodal utterance-level cross-lingual speech representation. IEEE Journal of Selected Topics in Signal Processing, pages 1–13.

- [Khurana et al., 2020] Khurana, S., Laurent, A., Hsu, W.-N., Chorowski, J., Lancucki, A., Marxer, R., and Glass, J. (2020). A convolutional deep Markov model for unsupervised speech representation learning. arXiv:2006.02547.

- [Li et al., 2020] Li, X., Wang, C., Tang, Y., Tran, C., Tang, Y., Pino, J., Baevski, A., Conneau, A., and Auli, M. (2020). Multilingual speech translation with efficient finetuning of pretrained models. arXiv:2010.12829.

- [Liu et al., 2021a] Liu, A. H., Jin, S., Lai, C.-I. J., Rouditchenko, A., Oliva, A., and Glass, J. (2021a). Cross-modal discrete representation learning. arXiv:2106.05438.

- [Liu et al., 2021b] Liu, A. T., Li, S.-W., and Lee, H.-y. (2021b). TERA: Self-supervised learning of transformer encoder representation for speech. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 29:2351–2366.

- [Liu et al., 2020] Liu, Y., Gu, J., Goyal, N., Li, X., Edunov, S., Ghazvininejad, M., Lewis, M., and Zettlemoyer, L. (2020). Multilingual denoising pre-training for neural machine translation. Transactions of the Association for Computational Linguistics, 8:726–742.

- [Oord et al., 2018] Oord, A. v. d., Li, Y., and Vinyals, O. (2018). Representation learning with contrastive predictive coding. arXiv:1807.03748.

- [Pascual et al., 2019] Pascual, S., Ravanelli, M., Serrà, J., Bonafonte, A., and Bengio, Y. (2019). Learning problem-agnostic speech representations from multiple self-supervised tasks. arXiv:1904.03416.

- [Rivière et al., 2020] Rivière, M., Joulin, A., Mazaré, P.-E., and Dupoux, E. (2020). Unsupervised pretraining transfers well across languages. arXiv:2002.02848.

- [Schneider et al., 2019] Schneider, S., Baevski, A., Collobert, R., and Auli, M. (2019). wav2vec: Unsupervised pre-training for speech recognition. arXiv:1904.05862.

- [Shor et al., 2021] Shor, J., Jansen, A., Han, W., Park, D., and Zhang, Y. (2021). Universal paralinguistic speech representations using self-supervised conformers. arXiv:2110.04621.

- [Sutskever et al., 2014] Sutskever, I., Vinyals, O., and Le, Q. V. (2014). Sequence to sequence learning with neural networks. arXiv:1409.3215.

- [Vaswani et al., 2017] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., and Polosukhin, I. (2017). Attention is all you need. arXiv:1706.03762.

- [Wang et al., 2020] Wang, C., Pino, J., Wu, A., and Gu, J. (2020). CoVoST: A diverse multilingual speech-to-text translation corpus. arXiv:2002.01320.

- [Yang et al., 2021] Yang, S.-w., Chi, P.-H., Chuang, Y.-S., Lai, C.-I. J., Lakhotia, K., Lin, Y. Y., Liu, A. T., Shi, J., Chang, X., Lin, G.-T., Huang, T.-H., Tseng, W.-C., Lee, K.-t., Liu, D.-R., Huang, Z., Dong, S., Li, S.-W., Watanabe, S., Mohamed, A., and Lee, H.-y. (2021). SUPERB: Speech processing universal performance benchmark. arXiv:2105.01051.
